In one of my earlier posts, I explained how I set up pfSense with my Proxmox homelab using a simple networking topology. That setup worked flawlessly until a recent incident brought everything down.

The trouble started when I noticed my ACME certificate had expired. Since there was a new version of the ACME plugin available, I decided to upgrade. Unfortunately, the upgrade process froze, and I lost access to the pfSense WebUI. With no way back into the admin console, I had no choice but to restart pfSense.

That restart had serious consequences: the system became stuck in a reboot loop and never came back online. In the end, I had to reinstall pfSense entirely. Luckily, I had configuration backups, so restoring my settings wasn’t tricky. But soon after, I discovered something unusual: Proxmox would no longer allow me to tag VMs with VLAN IDs.

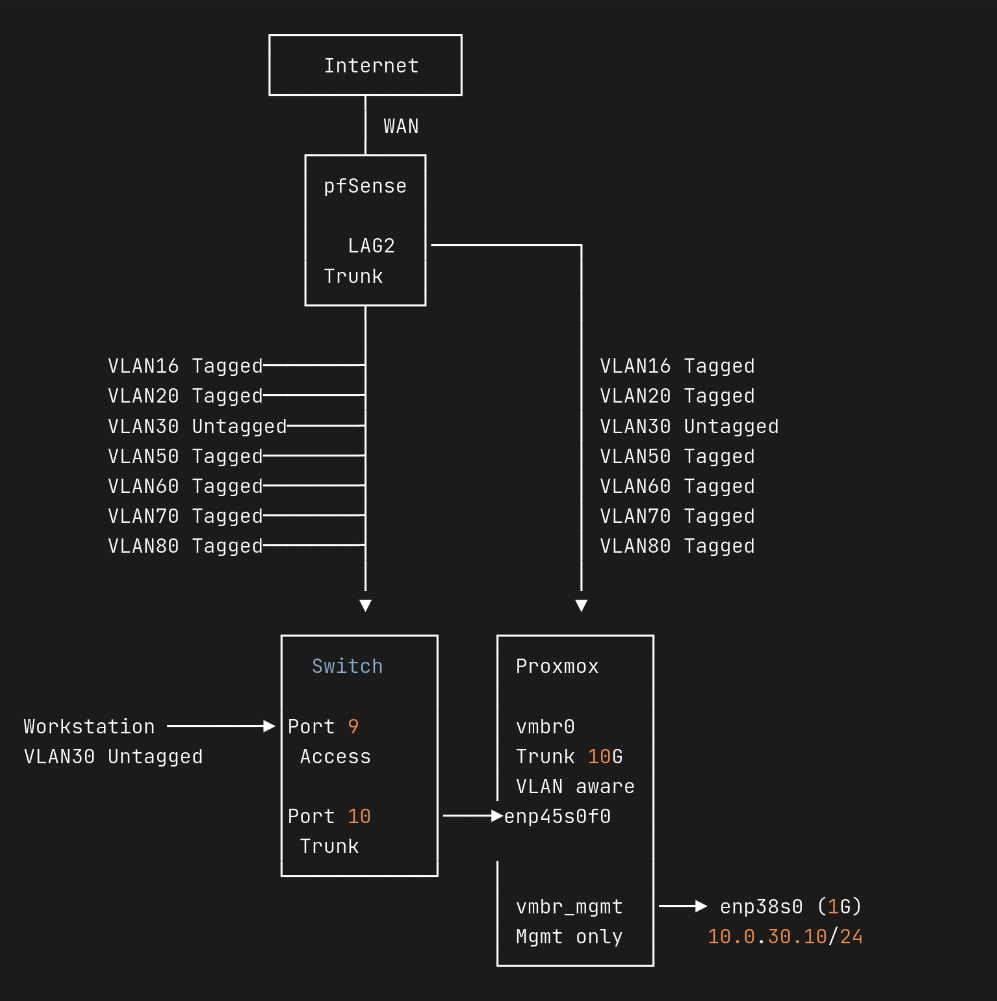

Here is my old setup:

Pre-Crash Setup (Old Design)

- Proxmox bridge design:

  - vmbr0 (management + VM traffic on the same bridge).

auto lo

iface lo inet loopback

auto enp45s0f0

iface enp45s0f0 inet manual

auto vmbr0

iface vmbr0 inet static

address 10.0.30.10/24

gateway 10.0.30.1

bridge-ports enp45s0f0

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

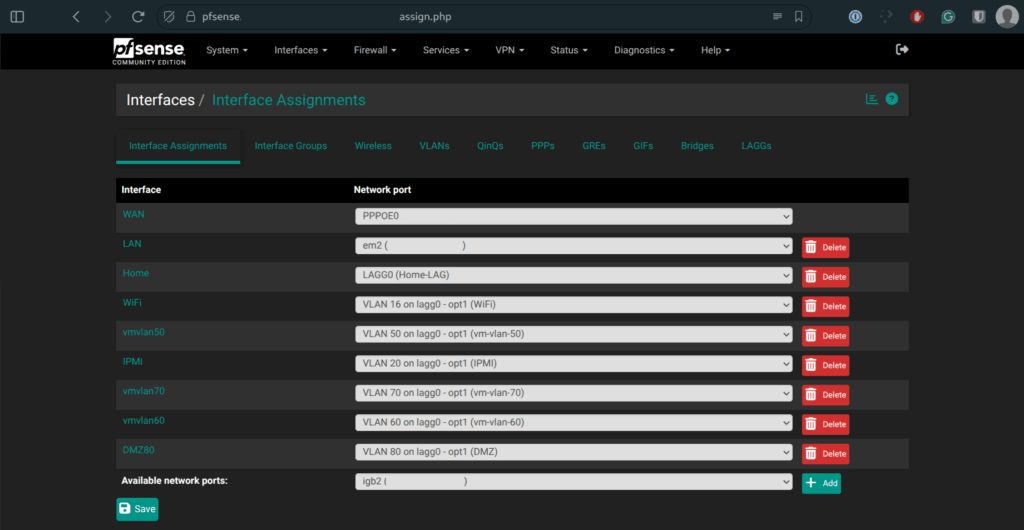

bridge-vids 2-4094PfSence VLAN Interfaces:

- Home: LAG0 (Native/home VLAN)

- WiFi: VLAN 16 on LAG0

- IPMI: VLAN 20 on LAG0

- vmvlan50: VLAN 50 (for VMs) on LAG0

- vmvlan60: VLAN 60 (for VMs) on LAG0

- vmvlan70: VLAN 70 on LAG0

- DMZ80: VLAN 80 (for DMZ services) on LAG0

Each VLAN is isolated via firewall rules and optionally routed via NAT, depending on the need.

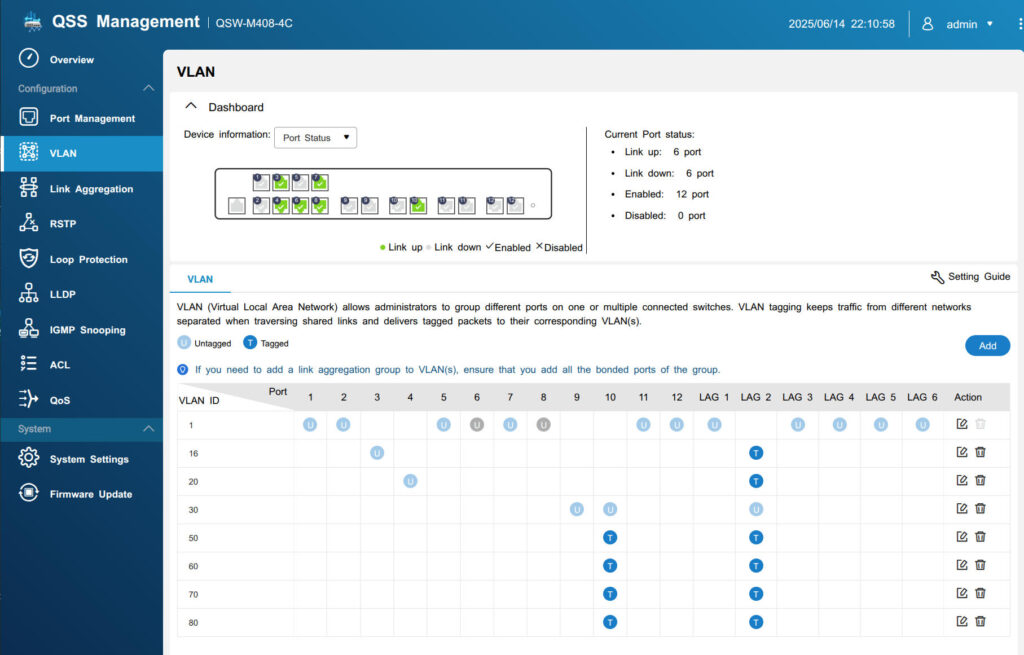

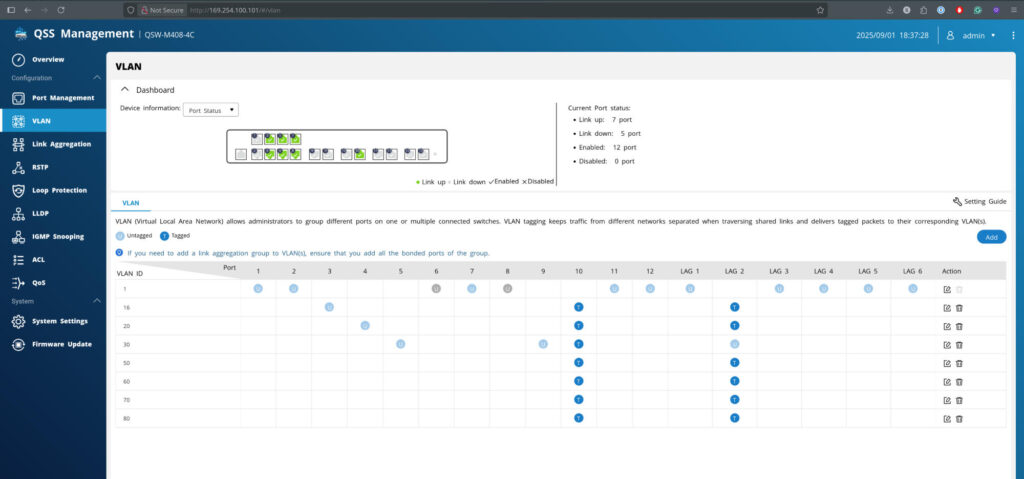

My managed switch VLAN setup

Post-Crash Setup (New Design)

How my old setup worked (single VLAN-aware bridge)

- I had one bridge (vmbr0) in Proxmox, VLAN-aware enabled.

- On my VMs, I set the VLAN tag (e.g. 60, 70, etc.).

- The switch port (to Proxmox) was trunked, so the tags passed straight through to pfSense.

- pfSense had interfaces for each VLAN, so DHCP + routing just worked.

What changed after the pfSense crash

- When the ACME plugin stalled, pfSense package management went sideways, and I ended up having to reinstall/restore.

- After restoration, some things didn’t line up 1:1 with my old config:

  - VLAN interfaces in pfSense may have been recreated in a different order.

  - The backup could have carried over old interface UUIDs or sysctls that didn’t match my new install.

  - The switch LAG settings remained unchanged, but the Proxmox bridge + pfSense interface mapping was now “off by one.”

That explains why, after re-enabling VLAN Aware in Proxmox following the crash, VMs stopped receiving DHCP. pfSense was no longer matching the VLANs as it had before.

Why it only blew up after ACME crashed

- The certificate itself wasn’t the cause of the crash.

- The ACME plugin being stuck forced me into upgrades/reinstall, which is when the interface mapping changed.

- If the ACME certificate hadn’t expired, I might have continued happily with the old pfSense config. Still, at some point (an upgrade, a NIC driver change, or another crash), the mismatch between the old VLAN mappings and the current Proxmox bridge handling would have surfaced anyway.

In other words, ACME didn’t cause the VLAN issue; it just forced the timing of when I discovered it.

After moving to my current setup, things got better. The DHCP started working again, and all the issues I had after restoring from the old config went away.

But the strange thing is that I used the same old pfSense config for this new setup.

The snag is that even with an identical XML, a few things can still change underneath and make the “same” config behave differently.

Here are the usual culprits that explain what I saw:

Why the same backup acts differently

- Interface re-enumeration / MAC mapping

  - After a reboot/upgrade/restoration, pfSense can see NICs in a different order (driver updates, LAG re-creation, PCI slot timing, etc.).

  - The backup says “LAN = lagg0.30”, but if lagg0 now has a different underlying member, or pfSense re-maps ports by MAC, the interface assignments and VLAN parents can misalign until I re-assign them.

- LAG/VLAN parent recreated “not quite the same”

  - lagg0 exists, but member order (ix0/ix1) or LACP state differs; frames still pass, but tagged and native VLANs might not match what my switch expects until the bond settles or is re-applied.

- Packages & versions after restore

  - ACME and other packages aren’t restored with their exact runtime state. On first boot they reinstall, sometimes as newer versions. That can trigger filter reloads, rc scripts, or service restarts that momentarily take down an interface or the DHCP server on a VLAN.

- Per-VLAN subinterfaces vs VLAN-aware bridge (Proxmox side)

  - Before the crash, I had a clean single VLAN-aware bridge. Afterwards, there were leftover enp45s0f0.X subinterfaces plus a VLAN-aware bridge. That split the path for tagged frames and black-holed DHCP until I removed the per-VLAN links.

  - Also, the bridge-vids line initially carried an inline comment. The interfaces file format does not support end-of-line comments, so no VLANs were actually allowed and the bridge dropped tagged frames.

- Switch “native/untagged” details

  - My LAG had VLAN 30 untagged (native). If during restore/changes the native VLAN on one side didn’t match the other, VLAN 30 traffic went to the wrong place until I put both ends back in agreement.

- Services inherit interface state

  - Even with the same XML, if an interface shows as “down” or not yet assigned, the DHCP server for that interface won’t start. Once I re-enabled each VLAN interface and restarted VMs (renewing leases), everything lit up.
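The two Proxmox-side pitfalls above (an inline comment on an option line, and leftover per-VLAN subinterfaces) are easy to miss by eye. Here is a minimal Python sketch that scans the text of an interfaces file for both. The function name lint_interfaces and the sample config are my own illustration, not part of Proxmox or any official tool, and the check is deliberately crude: it flags any "#" that is not at the start of a line.

```python
import re

# Matches per-VLAN subinterface names such as "enp45s0f0.60".
SUBIF = re.compile(r"^[A-Za-z0-9_-]+\.\d+$")


def lint_interfaces(text):
    """Return (line_number, message) pairs for the two pitfalls above.

    Hypothetical helper for illustration only.
    """
    findings = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and whole-line comments are fine
        if "#" in line:
            findings.append((number, "inline comment: move it to its own line"))
        words = line.split()
        if words[0] == "iface" and len(words) > 1 and SUBIF.match(words[1]):
            findings.append((number, f"per-VLAN subinterface {words[1]}"))
    return findings


# Made-up sample that reproduces both problems.
sample = """\
auto vmbr0
iface vmbr0 inet manual
    bridge-ports enp45s0f0
    bridge-vlan-aware yes
    bridge-vids 16 20 50 60 70 80  # VLANs I use
iface enp45s0f0.60 inet manual
"""

for number, message in lint_interfaces(sample):
    print(f"line {number}: {message}")
```

On a real host the text would come from /etc/network/interfaces; the sketch only reads it and never changes anything.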

Why it worked before the crash

Everything happened to line up: NIC mapping, LAG, switch native VLAN, Proxmox bridge, and pfSense VLAN assignments. The ACME mess didn’t cause the VLAN problem; it just pushed me into an upgrade/restore path where those fragile assumptions broke.

Why the new layout is safer (even with the same backup)

- Out-of-band mgmt on 1G (untagged VLAN 30) means I keep GUI/SSH regardless of trunk issues.

- Single, clean VLAN-aware trunk on 10G, no per-VLAN subinterfaces.

- I explicitly verified bridge-vids with the bridge vlan show command.

- Switch trunks/access are clearly defined (port 10 tagged, port 9 untagged 30, LAG2 tagged + native where required).

- I restarted VMs to rebuild tap devices/leases, removing stale paths.
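The bridge-vids verification can also be scripted. The sketch below, in Python, expands the VLAN IDs reported for one port and compares them with the list I expect. It assumes the JSON shape that I believe the bridge -json vlan show command prints (an "ifname" plus a "vlans" list, with "vlanEnd" marking ranges); the sample string is hand-written for illustration, so check it against the output of your own iproute2 version before relying on it. The function name allowed_vlans is hypothetical.

```python
import json


def allowed_vlans(bridge_json, port):
    """Expand the VLAN IDs reported for one port into a set of ints."""
    vids = set()
    for entry in json.loads(bridge_json):
        if entry.get("ifname") != port:
            continue
        for vlan in entry.get("vlans", []):
            start = vlan["vlan"]
            end = vlan.get("vlanEnd", start)  # ranges carry an end value
            vids.update(range(start, end + 1))
    return vids


# Hand-written sample, assumed to resemble `bridge -json vlan show` output.
sample = """[
  {"ifname": "enp45s0f0", "vlans": [
    {"vlan": 1, "flags": ["PVID", "Egress Untagged"]},
    {"vlan": 16}, {"vlan": 20}, {"vlan": 50},
    {"vlan": 60}, {"vlan": 70}, {"vlan": 80}
  ]}
]"""

expected = {16, 20, 50, 60, 70, 80}  # the bridge-vids list from my config
missing = expected - allowed_vlans(sample, "enp45s0f0")
print("missing VLANs:", sorted(missing) if missing else "none")
```

An empty result means every VLAN I expect on the trunk is actually programmed on the port, which is the condition that failed when the inline comment was present.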

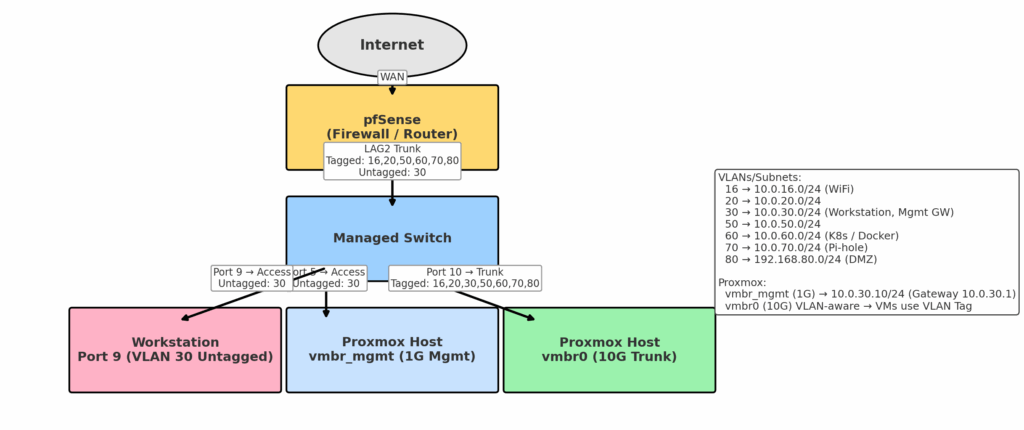

Here is what my new setup looks like:

Current Setup

Proxmox

- Mgmt Bridge (vmbr_mgmt)

  - Interface: enp38s0 (1G NIC)

  - IP: 10.0.30.10/24

  - Gateway: 10.0.30.1

  - Purpose: Out-of-band management (GUI, SSH).

  - Always reachable even if trunk VLANs break.

- VM Trunk Bridge (vmbr0)

  - Interface: enp45s0f0 (10G NIC)

  - VLAN-aware: Yes

  - No IP assigned (pure trunk).

  - Carries VLANs: 16, 20, 30, 50, 60, 70, 80 → pfSense.

auto lo

iface lo inet loopback

# --- 10G VM trunk (no IP, VMs tag at NIC) ---

auto enp45s0f0

iface enp45s0f0 inet manual

# 10G uplink to switch (trunk)

auto vmbr0

iface vmbr0 inet manual

bridge-ports enp45s0f0

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vids 16 20 50 60 70 80

# VLANs I use on this trunk

# --- 1G management (GUI/SSH) ---

auto enp38s0

iface enp38s0 inet manual

# 1G uplink to switch (access VLAN 30)

auto vmbr_mgmt

iface vmbr_mgmt inet static

address 10.0.30.10/24

gateway 10.0.30.1

bridge-ports enp38s0

bridge-stp off

bridge-fd 0

pfSense

- WAN: unchanged. PPPoE with ISP username and password.

- LAN/VLAN Interfaces:

  - VLAN16 → 10.0.16.1/24

  - VLAN20 → 10.0.20.1/24

  - VLAN30 → 10.0.30.1/24 (Workstation VLAN)

  - VLAN50 → 10.0.50.1/24

  - VLAN60 → 10.0.60.1/24

  - VLAN70 → 10.0.70.1/24 (Pi-hole)

  - VLAN80 → 192.168.80.1/24 (DMZ)

- DHCP is enabled per VLAN. Pi-hole at 10.0.70.17 serves as the primary DNS in pfSense, with the secondary DNS being the gateway of that VLAN.

- Firewall rules:

  - Each VLAN: allow VLAN net → any. (See pfSense for more detailed info)

- NAT: outbound NAT automatic → all VLANs NAT to WAN.
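As a quick sanity check on the addressing above, here is a small Python sketch using the standard ipaddress module. It confirms which VLAN a given host falls into and that none of the subnets overlap. The gateway table is copied from the list above; the helper names vlan_for and overlapping are my own and purely illustrative.

```python
import ipaddress
from itertools import combinations

# Gateway addresses as listed above (VLAN ID -> pfSense interface address).
GATEWAYS = {
    16: "10.0.16.1/24",
    20: "10.0.20.1/24",
    30: "10.0.30.1/24",
    50: "10.0.50.1/24",
    60: "10.0.60.1/24",
    70: "10.0.70.1/24",
    80: "192.168.80.1/24",
}


def vlan_for(host):
    """Return the VLAN ID whose subnet contains host, or None."""
    address = ipaddress.ip_address(host)
    for vlan_id, gateway in GATEWAYS.items():
        if address in ipaddress.ip_interface(gateway).network:
            return vlan_id
    return None


def overlapping():
    """Return pairs of VLAN IDs whose subnets overlap."""
    nets = {v: ipaddress.ip_interface(g).network for v, g in GATEWAYS.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


print("Pi-hole VLAN:", vlan_for("10.0.70.17"))
print("Proxmox mgmt VLAN:", vlan_for("10.0.30.10"))
print("Overlapping subnets:", overlapping())
```

If a restored config ever put an interface on the wrong subnet, a check like this would show the Pi-hole or the Proxmox management address landing in an unexpected VLAN, or in none at all.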

Switch Config

- Port 9 (Workstation, my Fedora):

  - VLAN30 Untagged (access port).

- Port 10 (Proxmox trunk):

  - VLANs 16,20,30,50,60,70,80 Tagged.

- LAG2 (pfSense trunk):

  - VLANs 16,20,50,60,70,80 Tagged.

  - VLAN30 Untagged → needed for workstation (my Fedora) VLAN to work.
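Since the old failure came down to the two ends of a link disagreeing about VLANs, it helps to compare the lists side by side. This Python sketch does that with plain sets, using the VLAN lists exactly as written in this post; the names and the helper only_on_one_side are illustrative assumptions, not output from the switch or Proxmox.

```python
# VLAN lists copied from the Proxmox and switch sections of this post.
BRIDGE_VIDS = {16, 20, 50, 60, 70, 80}        # bridge-vids on vmbr0
PORT10_TAGGED = {16, 20, 30, 50, 60, 70, 80}  # switch port 10 (Proxmox trunk)
LAG2_TAGGED = {16, 20, 50, 60, 70, 80}        # switch LAG2 (pfSense trunk)
LAG2_UNTAGGED = {30}                          # native VLAN on LAG2


def only_on_one_side(a, b):
    """Return (in a but not b, in b but not a) as sorted lists."""
    return sorted(a - b), sorted(b - a)


links = {
    "vmbr0 bridge-vids vs switch port 10": (BRIDGE_VIDS, PORT10_TAGGED),
    "switch port 10 vs LAG2 (tagged + untagged)": (
        PORT10_TAGGED,
        LAG2_TAGGED | LAG2_UNTAGGED,
    ),
}

for name, (left, right) in links.items():
    left_only, right_only = only_on_one_side(left, right)
    print(f"{name}: left only {left_only}, right only {right_only}")
```

With these lists, the only difference is VLAN 30: it is tagged on port 10 but not listed in bridge-vids. Management traffic uses the separate 1G access port in this layout, so that may well be fine, but a VM on vmbr0 tagged with VLAN 30 would likely not pass traffic until 30 is added to bridge-vids.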

Network Diagram

Here’s a diagram that shows everything:

New Switch VLAN Config

Conclusion

This incident made me aware of the fragility of homelab networking, where everything hinges on a single configuration aligning perfectly. What looked like a routine ACME upgrade ended up exposing deeper issues with VLAN mappings, interface assignments, and switch configurations.

By separating management traffic onto its own bridge, cleaning up the Proxmox VLAN design, and verifying switch port settings, I’ve now got a setup that’s far more resilient. Even if pfSense crashes again, I won’t lose access to my Proxmox node or my management network.

The key lesson is that backups alone aren’t enough; you also need to understand how interfaces, VLANs, and bridges interact underneath. That knowledge helps turn a failure into an opportunity to build something more reliable.